Marginal potential

If Potential is a tension between what is and what could be, modeled as a distribution $P_F$ of Value outcomes in a given frame $F$, then one way to conceptualize the potential in a thing is in terms of the difference, or change, it induces in our frame.

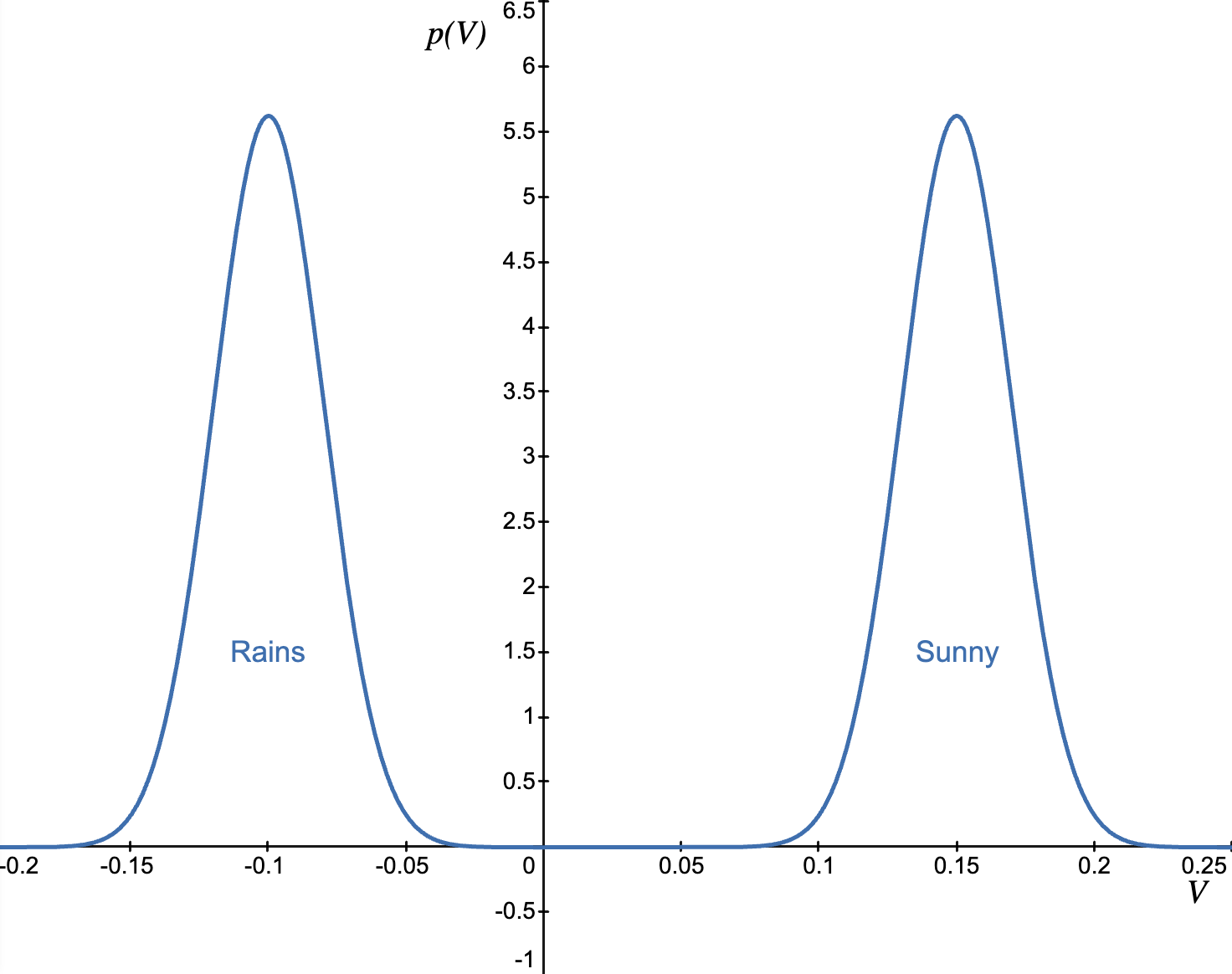

Consider the frame of going out for a walk. You know there's a 50% chance it will rain. Do you take the umbrella or not? You can imagine two counterfactuals: one where you take the umbrella, and one where you don't.

Below is the potential of the frame without the umbrella. We can see a bimodal distribution of Value outcomes. If it ends up raining, varying degrees of negative Value outcomes can be expected (getting wet, cold, ill), which is modeled by the left hump. The right hump models the distribution of expected positive outcomes when the weather is good.

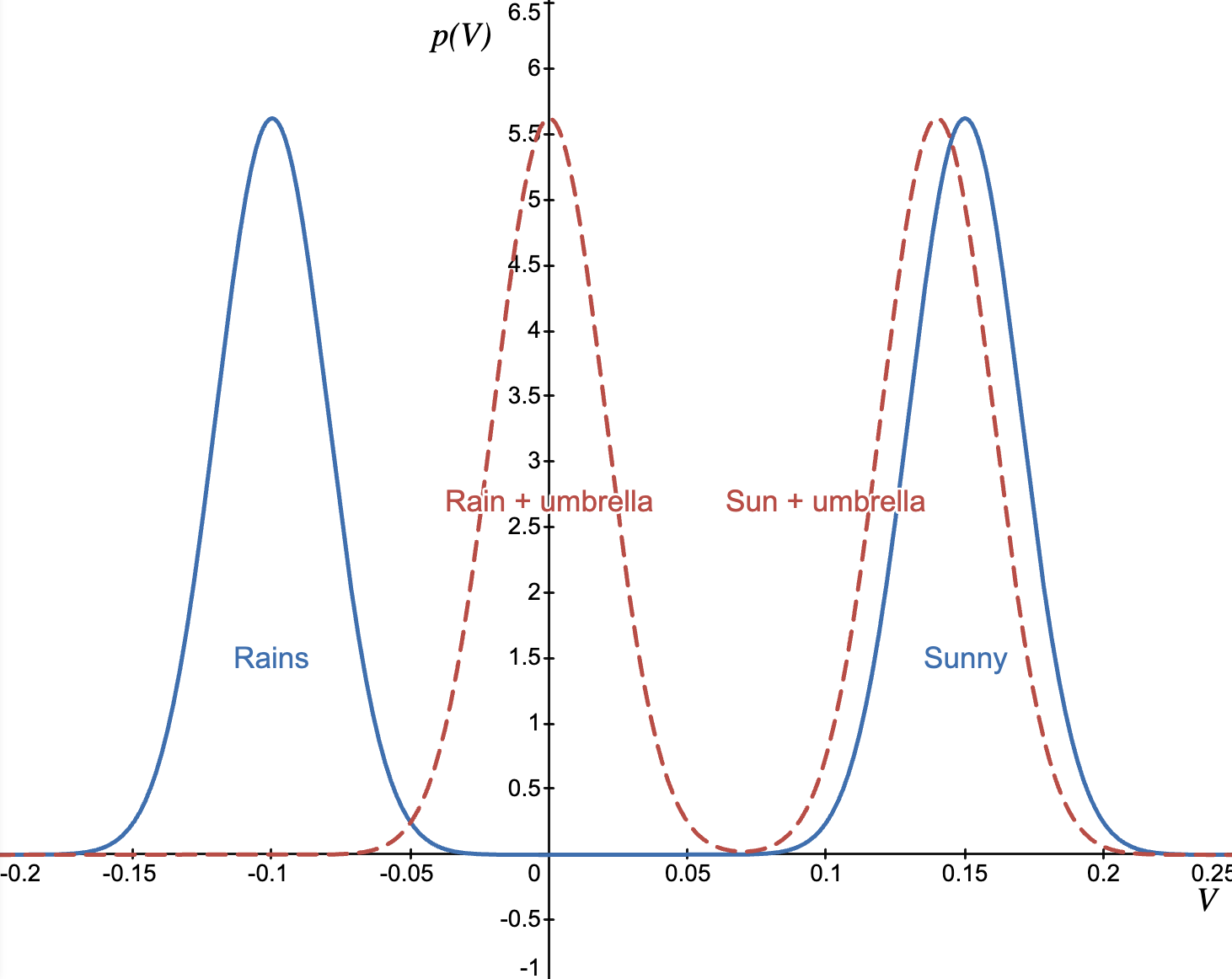

Now, if we consider taking the umbrella, we might see a potential like this:

The "rainy weather" hump has moved to the right, which means that the negative Value outcomes of the rainy case have been largely mitigated. The "good weather" hump has moved slightly to the left, because the carrying cost of the umbrella introduces a slight drag in our walking frame. It's important to note that if the umbrella had no carrying cost, we'd always take it with us, because there would be only upside. That's similar to how we tend to take our smartphone with us everywhere we go, even though we don't need it most of the time: because it fits in a pocket, its carrying cost is practically zero.
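As an illustration only, the two potentials can be simulated. The sketch below assumes each potential is a mixture of two normal "humps", one per weather branch, weighted by the 50% chance of rain. The hump means and spreads, the histogram range, and the names `Hump`, `sample_potential` and `text_histogram` are hypothetical choices made to mimic the shapes described above; they are not values read off the figures.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Hump:
    """One mode of a potential: a normal distribution of Value outcomes."""
    weight: float  # probability of this branch (rain vs. good weather)
    mean: float
    sd: float


# Hypothetical parameters, chosen only to mimic the shapes described in the text.
WITHOUT_UMBRELLA = [Hump(0.5, -3.0, 1.0),  # rain: wet, cold, maybe ill
                    Hump(0.5, 2.0, 1.0)]   # good weather: a pleasant walk
WITH_UMBRELLA = [Hump(0.5, -0.5, 1.0),     # rain hump shifted right: mostly dry
                 Hump(0.5, 1.8, 1.0)]      # good-weather hump shifted left: carrying cost


def sample_potential(humps, n, rng):
    """Draw n Value outcomes: pick a branch by its weight, then sample its normal."""
    branches = rng.choices(humps, weights=[h.weight for h in humps], k=n)
    return [rng.gauss(h.mean, h.sd) for h in branches]


def text_histogram(samples, lo=-6.0, hi=5.0, bins=11, width=40):
    """One line per bin: the bin's left edge and a bar scaled to the tallest bin."""
    step = (hi - lo) / bins
    counts = [0] * bins
    for v in samples:
        if lo <= v < hi:
            counts[min(int((v - lo) // step), bins - 1)] += 1
    peak = max(counts) or 1  # avoid dividing by zero if every sample is out of range
    return [f"{lo + i * step:+5.1f} {'#' * round(width * c / peak)}"
            for i, c in enumerate(counts)]


rng = random.Random(42)  # fixed seed so the picture is reproducible
without = sample_potential(WITHOUT_UMBRELLA, 10_000, rng)
with_umbrella = sample_potential(WITH_UMBRELLA, 10_000, rng)

print("without umbrella")
print("\n".join(text_histogram(without)))
print("with umbrella")
print("\n".join(text_histogram(with_umbrella)))
```

The output is two rough text histograms: without the umbrella the left hump sits around -3, and with it the left hump moves to around -0.5 while the right hump drifts slightly left, from 2 to 1.8.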

The difference between these two potentials is the marginal potential. Phenomenologically, marginal potential is the felt difference between two counterfactual frames, and we intuitively attribute this difference to the entity we use to transform our frame. A “thing” $x$ transforms the frame $F$ into $F \oplus x$, and thus the distribution $P_F$ into $P_{F \oplus x}$.

The question is whether the transformation is useful. Just by looking at the two shapes above, it's not obvious which one is better. The shapes (and the emotions associated with them) cannot simply be subtracted from one another; they have to be compared along an aspect we find relevant. If that aspect is expected Value, we subtract the expected Value of the original potential $P_F$ from that of the transformed potential $P_{F \oplus x}$ and check whether the result is positive.
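For the expected-Value comparison, a mixture of humps has a closed-form mean, so no sampling is needed. The sketch below assumes the umbrella example is modeled as two-hump mixtures; the hump parameters and the names `Hump` and `expected_value` are hypothetical, and only the 50% rain probability comes from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hump:
    """One mode of a potential: a normal distribution of Value outcomes."""
    weight: float  # probability of this branch
    mean: float
    sd: float


def expected_value(humps):
    """Mean of a mixture: the probability-weighted average of the hump means.

    The spreads (sd) play no role, which is why expected Value is blind
    to the shape of the potential.
    """
    total_weight = sum(h.weight for h in humps)
    return sum(h.weight * h.mean for h in humps) / total_weight


# Hypothetical umbrella example with a 50% chance of rain.
p_F = [Hump(0.5, -3.0, 1.0), Hump(0.5, 2.0, 1.0)]           # without umbrella
p_F_umbrella = [Hump(0.5, -0.5, 1.0), Hump(0.5, 1.8, 1.0)]  # with umbrella

delta = expected_value(p_F_umbrella) - expected_value(p_F)
verdict = "take it" if delta > 0 else "leave it"
print(f"E[V] without umbrella: {expected_value(p_F):+.2f}")
print(f"E[V] with umbrella:    {expected_value(p_F_umbrella):+.2f}")
print(f"difference:            {delta:+.2f} ({verdict})")
```

With these numbers it reports an expected Value of -0.50 without the umbrella, +0.65 with it, and a difference of +1.15. The spreads never enter the calculation, so two potentials with very different risks can receive the same score.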

But we're not purely rational agents who look only at expected Value; otherwise gambling and lotteries wouldn't exist. We can define a summary functional $\phi$ that encodes other aspects of the potential (and of the feeling) that we care about: risk aversion, downside safety, and so on. Like expected Value, such a functional extracts a single number from the distribution, which makes a meaningful comparison between the two frames possible.

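The marginal potential of a thing $x$ in frame $F$, under functional $\phi$, can be defined as:

$$\Delta_\phi(x \mid F) = \phi(P_{F \oplus x}) - \phi(P_F)$$

where $P_F$ is the potential of frame $F$ and $P_{F \oplus x}$ is the potential of the frame transformed by $x$.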

Humans don’t use the same rule everywhere. In safety-critical contexts we are tail-focused; in exploration we tolerate variance. The choice of functional $\phi$ lets the same formalism serve different aims. Because $\phi$ can be chosen to reflect what the frame cares about, the marginal potential $\Delta_\phi(x \mid F)$ directly measures the meaningful contribution of $x$ in that context, that is, whether it acts as a tool or an obstacle. If we care about trust or fear of ruin, we can compute the marginal potential under the right functional and get a decision signal for whether to include $x$.
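Marginal potential as a difference of functional values translates almost directly into code. The sketch below assumes a potential is represented by a finite list of equally likely Value outcomes and that a functional is any function from such a list to a number. The outcome lists and the `worst_case` functional are hypothetical, chosen to show how a mean-focused and a tail-focused functional can disagree about the same thing $x$.

```python
from statistics import fmean
from typing import Callable, Sequence

Potential = Sequence[float]                # equally likely Value outcomes
Functional = Callable[[Potential], float]  # summary of a potential as one number


def marginal_potential(phi: Functional, before: Potential, after: Potential) -> float:
    """Marginal potential of x under phi: phi(potential with x) - phi(potential without x)."""
    return phi(after) - phi(before)


def worst_case(p: Potential) -> float:
    """Hypothetical tail-focused functional: the worst outcome in the potential."""
    return min(p)


# Hypothetical frame: x raises most outcomes but adds a rare, severe branch.
before = [-1.0, 0.0, 1.0, 2.0]
after = [-5.0, 2.0, 3.0, 4.0]

for name, phi in [("mean", fmean), ("worst case", worst_case)]:
    delta = marginal_potential(phi, before, after)
    verdict = "include x" if delta > 0 else "leave x out"
    print(f"{name:>10}: delta = {delta:+.2f} -> {verdict}")
```

Here the mean-based marginal potential is +0.50 (include $x$), while the worst-case one is -4.00 (leave $x$ out): the same thing reads as a tool in an exploratory frame and as an obstacle in a safety-critical one.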

Here are some simple functionals:

Expected value: $\phi_{\mathbb{E}}(P) = \mathbb{E}_{V \sim P}[V]$

Measures the average gain or loss and assumes risk neutrality. Use it for planning under mild risk, when the mean is a sufficient summary; it ignores variance, tails, and multimodality.

Trust coefficient: $\phi_{\tau}(P) = \Pr_{V \sim P}(V > 0) - \Pr_{V \sim P}(V < 0)$

Captures whether the distribution leans positive or negative, disregarding magnitudes. Good for quick go/no-go judgments and for signaling “more good branches than bad,” but insensitive to how good or how bad the branches are.

Risk-averse utility: $\phi_u(P) = \mathbb{E}_{V \sim P}[u(V)]$, with $u$ concave

Encodes diminishing returns and loss aversion through the shape of $u$. Choose $u$ to reflect the frame’s attitude to risk; if $u$ is linear (and increasing), $\phi_u$ ranks frames exactly as expected value does.
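The three functionals can be sketched on a discretized potential, again assuming a list of equally likely Value outcomes. The outcome lists below are a hypothetical three-rainy, three-sunny version of the umbrella example, and `exp_utility` (exponential utility, $u(v) = 1 - e^{-av}$ with $a > 0$) is one hypothetical choice of concave $u$, not one prescribed by the text.

```python
import math
from statistics import fmean
from typing import Callable, Sequence

Potential = Sequence[float]  # equally likely Value outcomes


def expected_value(p: Potential) -> float:
    """phi_E: the average outcome (risk-neutral)."""
    return fmean(p)


def trust_coefficient(p: Potential) -> float:
    """phi_tau: share of good branches minus share of bad ones, in [-1, 1]."""
    return (sum(v > 0 for v in p) - sum(v < 0 for v in p)) / len(p)


def risk_averse_utility(u: Callable[[float], float]) -> Callable[[Potential], float]:
    """phi_u: expected utility E[u(V)]; u should be concave for risk aversion."""
    return lambda p: fmean([u(v) for v in p])


def exp_utility(v: float, a: float = 0.5) -> float:
    """Hypothetical concave u: exponential utility; larger a means more risk-averse."""
    return 1.0 - math.exp(-a * v)


# Hypothetical discretized umbrella frame: three rainy and three sunny branches.
without = [-4.0, -3.0, -2.0, 1.0, 2.0, 3.0]
with_umbrella = [-1.0, -0.5, 0.0, 0.8, 1.8, 2.8]

functionals = {
    "expected value": expected_value,
    "trust coefficient": trust_coefficient,
    "risk-averse utility": risk_averse_utility(exp_utility),
}
# Marginal potential of the umbrella under each functional.
deltas = {name: phi(with_umbrella) - phi(without) for name, phi in functionals.items()}
for name, delta in deltas.items():
    print(f"{name:>20}: delta = {delta:+.3f}")
```

In this toy frame all three functionals favor the umbrella, but by different amounts: the expected-value delta is 1.15, the trust delta is 1/6, and the risk-averse delta is about 1.76, larger than the expected-value one because the concave $u$ penalizes the steep rainy losses that the umbrella removes. Passing the identity, `risk_averse_utility(lambda v: v)`, gives back plain expected value.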